NLP - Text Generation, Summarization & Sentiment Analysis

This page documents several NLP experiments I ran to understand the different models available on Hugging Face. All of them were done with the Hugging Face `pipeline`, the most powerful object in the library: it is a wrapper that encapsulates all the other, task-specific pipelines. It is instantiated simply by passing a task name, which selects the corresponding task-specific pipeline. Visit the Hugging Face page for more details.
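As a minimal sketch of that idea, assuming the `transformers` library is installed, the same `pipeline()` function returns a different task-specific pipeline depending on the task string. The checkpoint IDs below are assumptions for illustration, since this page does not list the exact model IDs, and `build_pipeline` is a hypothetical helper.

```python
from typing import Any, Dict

# Task string -> checkpoint. The checkpoint IDs are assumptions for illustration.
TASK_MODELS: Dict[str, str] = {
    "text-generation": "EleutherAI/gpt-neo-1.3B",
    "summarization": "facebook/bart-large-cnn",
    # "sentiment-analysis" is an alias for the "text-classification" task.
    "sentiment-analysis": "cardiffnlp/twitter-roberta-base-sentiment",
}


def build_pipeline(task: str) -> Any:
    """Return the task-specific pipeline that pipeline() builds for `task`."""
    if task not in TASK_MODELS:
        raise ValueError(f"unsupported task: {task!r}")
    # Imported lazily so the mapping can be inspected without transformers installed.
    from transformers import pipeline

    return pipeline(task, model=TASK_MODELS[task])
```

For example, `build_pipeline("summarization")` should return a `SummarizationPipeline`, and `build_pipeline("sentiment-analysis")` a `TextClassificationPipeline`. Each checkpoint is downloaded on first use.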

|  | Text Generation | Text Summarization | Sentiment Analysis |
|---|---|---|---|
| Model | GPT-Neo-1.3B (2.7B attempted) | BART-CNN | RoBERTa |
| Pipeline | TextGenerationPipeline | SummarizationPipeline | TextClassificationPipeline |
| Fine-tuned | No | No | No |
| Web scraping | None | Articles (BeautifulSoup) | Tweets (Twitter API & Tweepy) |

None of the three transformer experiments involves model building, fine-tuning, or training; they simply demonstrate the power of transfer learning and Hugging Face's transformer pipeline.

Exp #1: Text Generation

For this task I wanted to use GPT-3, a transformer (attention-based) model trained on a huge amount of data with a very large number of parameters (175 billion), which is why it attracted so much attention from researchers. Unfortunately, GPT-3 has not been made public and is only accessible through the OpenAI API. So I used GPT-Neo, which is available on Hugging Face: it is not as good as GPT-3, but it was trained on more data than GPT-2.

When I tried GPT-Neo 2.7B (2.7 billion parameters, with a checkpoint of about 10 GB), I ran into out-of-memory problems: Google Colab crashed while loading the model. So I downgraded to the smaller GPT-Neo-1.3B model. Larger models are also available from EleutherAI, such as the 6-billion-parameter GPT-J.
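The crash is consistent with some back-of-the-envelope arithmetic: the weights alone take roughly parameter count × bytes per parameter. This sketch uses the nominal counts from the model names and ignores activations and any temporary copies made while loading, so real peak memory use is higher.

```python
def weight_memory_gb(num_params: int, bytes_per_param: int = 4) -> float:
    """Approximate memory for the model weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Nominal parameter counts taken from the model names.
MODELS = {"GPT-Neo-2.7B": 2_700_000_000, "GPT-Neo-1.3B": 1_300_000_000}

for name, n_params in MODELS.items():
    fp32 = weight_memory_gb(n_params, 4)  # float32: 4 bytes per parameter
    fp16 = weight_memory_gb(n_params, 2)  # float16: 2 bytes per parameter
    print(f"{name}: ~{fp32:.1f} GB in float32, ~{fp16:.1f} GB in float16")
```

This gives about 10.8 GB for the 2.7B model in float32 versus 5.2 GB for the 1.3B model, in line with the ~10 GB checkpoint size. Loading in half precision (for example `torch_dtype=torch.float16` in `from_pretrained`) is a common way to halve the weight memory.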

Following are the model inferences:

| Prompt | Output |
|---|---|
| What is the meaning of life? | What is the meaning of life? And is it to be considered as an activity? Life's Meaning is not limited to Activities Life is not an activity but the most important meaning of life is the meaning of life itself. But we always thought like that, we used the word "determinism". But the definition of determinism is 'ability of being a deterministic system'. If a person cannot be a determinist by his mind itself,then this person cannot exist |
| To love is to suffer | To love is to suffer. Love, I believe, is the root of suffering. It is the passion for the experience of being in love. Love is a feeling that is difficult to describe but is ultimately an experience of feeling it in a way that cannot be described. Love is like a fire; a consuming consuming, burning passion that burns down mountains, burns through walls and burns out the sky. But the best thing in the world is to love. |
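A minimal sketch of how outputs like these can be produced. The write-up does not state the generation settings, so `max_length`, `do_sample` and `temperature` below are assumptions; `generate_texts` is a hypothetical helper that accepts any callable with the text-generation pipeline's interface (a prompt in, a list of `{"generated_text": ...}` dicts out).

```python
from typing import Any, Callable, Dict, List

# Any callable shaped like a transformers text-generation pipeline.
Generator = Callable[..., List[Dict[str, Any]]]


def generate_texts(prompts: List[str], generator: Generator,
                   max_length: int = 100) -> Dict[str, str]:
    """Map each prompt to one sampled continuation (which includes the prompt)."""
    results: Dict[str, str] = {}
    for prompt in prompts:
        # Sampling settings are assumptions; the original does not report them.
        outputs = generator(prompt, max_length=max_length,
                            do_sample=True, temperature=0.9)
        results[prompt] = outputs[0]["generated_text"]
    return results
```

With a real model this would be called as `generate_texts(prompts, pipeline("text-generation", model="EleutherAI/gpt-neo-1.3B"))`. Because sampling is random, each run produces a different continuation; calling `transformers.set_seed` first makes runs repeatable.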

Exp #2: Text Summarization

For this task I used a pretrained summarization model to summarize blog posts. I scraped the posts with `BeautifulSoup`, and the model I chose from huggingface.co/models was BART-CNN, the default in the summarization pipeline. BART-CNN is in fact an abstractive (sequence-to-sequence) model rather than an extractive one, although its summaries often stay close to the wording of the source. Other models are also available on the summarization models page.
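A minimal sketch of the scraping step, assuming the `beautifulsoup4` package. The CSS selector for the article body is a hypothetical parameter: each site needs its own, and the page does not say which elements were extracted.

```python
from typing import List

from bs4 import BeautifulSoup


def extract_paragraphs(html: str, container: str = "body") -> List[str]:
    """Return the non-empty <p> texts inside the first element matching `container`.

    `container` is a CSS selector; if nothing matches, the whole document is searched.
    """
    soup = BeautifulSoup(html, "html.parser")
    root = soup.select_one(container) or soup
    # get_text(" ", strip=True) trims each text fragment and joins nested tags with a space.
    texts = [p.get_text(" ", strip=True) for p in root.find_all("p")]
    return [t for t in texts if t]
```

In practice the HTML would come from an HTTP request, for example `requests.get(url, timeout=30).text`, and the paragraphs would be joined into one article string before chunking.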

I used BeautifulSoup to extract specific portions of each web article and then split the text into chunks, because passing the entire article at once would have caused an error: most NLP models are built and trained with a maximum number of tokens they can process at a time (1,024 for BART). So I split the articles into chunks of 500 words.
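The chunking step can be sketched as follows. The page does not say how chunk boundaries were chosen, so this version assumes a limit of 500 words per chunk and keeps sentences whole where possible, splitting only sentences longer than the limit. Words are only a proxy for the model's tokens (a word is often more than one token), which is why the limit sits well below BART's 1,024-token maximum. `chunk_text` is a hypothetical helper.

```python
import re
from typing import List


def chunk_text(text: str, max_words: int = 500) -> List[str]:
    """Group whole sentences into chunks of at most `max_words` words.

    A single sentence longer than `max_words` is split on word boundaries.
    """
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks: List[List[str]] = []
    current: List[str] = []
    for sentence in sentences:
        words = sentence.split()
        # Break up very long sentences so no chunk exceeds the limit.
        while len(words) > max_words:
            if current:
                chunks.append(current)
                current = []
            chunks.append(words[:max_words])
            words = words[max_words:]
        # Start a new chunk if this sentence would overflow the current one.
        if len(current) + len(words) > max_words:
            chunks.append(current)
            current = []
        current.extend(words)
    if current:
        chunks.append(current)
    return [" ".join(chunk) for chunk in chunks]
```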

Following are the model inferences:

Original Article Length: 649

Summary length: 69

| Article | Output |
|---|---|
| Understanding-LSTMs | Recurrent neural networks are networks with loops in them, allowing information to persist . These loops make them seem kind of mysterious, but they aren’t all that different than a normal neural network . LSTMs are a very special kind of recurrent neural network which works, for many tasks, much much better than the standard version . Long Short Term Memory networks are a special kind of RNN, capable of learning long-term dependencies . They work tremendously well on a large variety of problems, and are now widely used . The key to LSTMs is the cell state, the horizontal line running through the top of the diagram . The cell state is kind of like a conveyor belt, with only some minor linear interactions . LSTM has three gates, to protect and control the cell state . They are composed out of a sigmoid neural net layer and a pointwise multiplication operation . The first step is to decide what information we’re going to throw away from the state . In the example of our language model, we want to add the gender of the new subject to the state, so that the correct pronouns can be used . The previous steps already decided what to do, we need to actually do it . Not all LSTMs are the same as the above; almost every paper uses a slightly different version . The Gated Recurrent Unit, or GRU, combines the forget and input gates into a single “update gate” The resulting model is simpler than standard LSTM models and growing increasingly popular . A common opinion among researchers is: “Yes! There is a next step and it’s attention! ” The idea is to let every step of an RNN pick information to look at from some larger collection of information . Grid LSTMs by Kalchbrenner, et al. (2015) seem extremely promising . The last few years have been an exciting time for recurrent neural networks . |

Exp #3: Text Sentiment Analysis

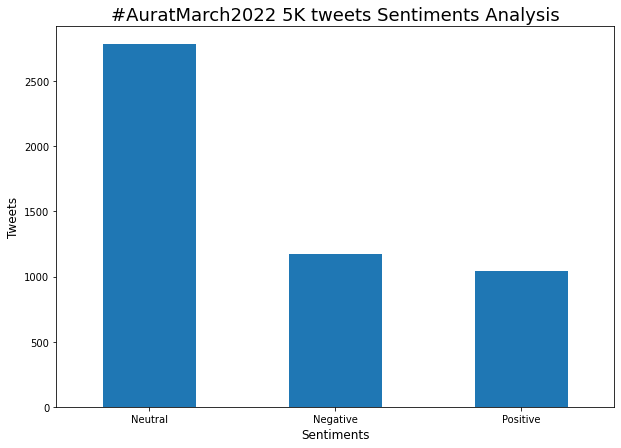

For this task I ran two experiments because I wasn't satisfied with the results of the first. First, I used the Hugging Face pipeline to load RoBERTa, a model trained on ~58M tweets and fine-tuned for sentiment analysis with the TweetEval benchmark; this model is suitable for English. Second, I built a Naive Bayes model, which I trained on the NLTK movie-reviews corpus.
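The page does not include the Naive Bayes code, and the original used NLTK. As a stand-in (not NLTK's implementation), here is a small from-scratch multinomial Naive Bayes with add-one (Laplace) smoothing that shows what such a classifier computes: log P(class) plus the sum of log P(word | class) over the document's words, choosing the class with the highest score. The toy training data in the test is hypothetical; in the experiment the training documents would come from the NLTK `movie_reviews` corpus.

```python
import math
from collections import Counter
from typing import Dict, List, Tuple


class MultinomialNB:
    """Multinomial Naive Bayes over bag-of-words counts, with add-one smoothing."""

    def fit(self, docs: List[Tuple[List[str], str]]) -> "MultinomialNB":
        self.class_counts: Counter = Counter(label for _, label in docs)
        self.word_counts: Dict[str, Counter] = {c: Counter() for c in self.class_counts}
        for words, label in docs:
            self.word_counts[label].update(words)
        self.vocab = {w for counts in self.word_counts.values() for w in counts}
        self.totals = {c: sum(counts.values()) for c, counts in self.word_counts.items()}
        self.n_docs = len(docs)
        return self

    def log_score(self, words: List[str], label: str) -> float:
        """log P(label) + sum over known words of log P(word | label)."""
        score = math.log(self.class_counts[label] / self.n_docs)
        denom = self.totals[label] + len(self.vocab)
        for w in words:
            if w in self.vocab:  # words never seen in training are ignored
                score += math.log((self.word_counts[label][w] + 1) / denom)
        return score

    def predict(self, words: List[str]) -> str:
        return max(self.class_counts, key=lambda c: self.log_score(words, c))
```

Working in log space avoids numerical underflow when many small word probabilities are multiplied, and the add-one smoothing keeps a word seen in only one class from zeroing out the other class's score.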

Both models were run on tweets downloaded with the Twitter API and the Python Tweepy library. I downloaded a total of 5000 tweets about a specific topic, using the hashtag #AuratMarch2022, to gauge people's sentiment. However, because the content of these tweets differed so much from the data the models were trained on, both models performed poorly and produced many misclassifications.
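A sketch of scoring the downloaded tweets and tallying the results for a chart. It assumes the `cardiffnlp/twitter-roberta-base-sentiment` checkpoint (the page does not give the exact model ID), whose model card maps `LABEL_0`/`LABEL_1`/`LABEL_2` to negative/neutral/positive and recommends replacing user mentions with `@user` and links with `http` before inference. `classify` is any callable with the text-classification pipeline's interface; fetching the tweets with Tweepy is left out.

```python
from collections import Counter
from typing import Callable, Dict, List

# Label mapping from the model card of the assumed checkpoint.
LABELS = {"LABEL_0": "negative", "LABEL_1": "neutral", "LABEL_2": "positive"}

Classifier = Callable[[List[str]], List[Dict[str, object]]]


def preprocess(tweet: str) -> str:
    """Replace @mentions with '@user' and links with 'http', as the model card suggests."""
    words = []
    for w in tweet.split(" "):
        w = "@user" if w.startswith("@") and len(w) > 1 else w
        w = "http" if w.startswith("http") else w
        words.append(w)
    return " ".join(words)


def tally_sentiment(tweets: List[str], classify: Classifier) -> Counter:
    """Count predicted sentiment labels over a batch of tweets."""
    predictions = classify([preprocess(t) for t in tweets])
    # Unknown labels are kept as-is so nothing is silently dropped.
    return Counter(LABELS.get(str(p["label"]), str(p["label"])) for p in predictions)
```

With the real model this would be `tally_sentiment(tweets, pipeline("sentiment-analysis", model="cardiffnlp/twitter-roberta-base-sentiment"))`, and the resulting counts can be plotted directly as a bar or pie chart.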

Sentiment analysis of 5000 tweets about #AuratMarch2022 using RoBERTa

Future Experiments

In the future I plan to use GPT-2 for text generation, run sentiment analysis on Yelp reviews, try abstractive summarization with Pegasus, and build my own models based on research papers.

To view the source code, please visit my GitHub page.